Evaluate quality of knowledge base responses

Response quality evaluation addresses several key tasks:

- Determine how well the knowledge base handles typical user queries.

- Optimize response quality by adjusting settings and analyzing the results.

- Monitor changes in response quality over time as data sources are updated.

To evaluate response quality, you need at least the KHUB_EDITOR role.

How evaluation works

Evaluation uses a test set: a list of queries to the knowledge base together with their expected responses. Each query is sent to the knowledge base, and the actual response is passed to an LLM together with the query and the expected response. The LLM rates the actual response on a scale from 1 to 10. The final score is the average of these ratings across the entire test set.
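The flow described above can be sketched in Python. This is a minimal illustration, not the product's implementation: `evaluate_test_set`, `ask_knowledge_base`, and `judge` are hypothetical names, and the two callables stand in for the knowledge base and the evaluating LLM, which the product calls internally.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical stand-ins: the product queries the knowledge base and the
# evaluating LLM itself; here they are plain functions passed in by the caller.
AskFn = Callable[[str], str]              # query -> actual response
JudgeFn = Callable[[str, str, str], int]  # (query, expected, actual) -> score


def evaluate_test_set(
    test_set: Sequence[Tuple[str, str]],
    ask_knowledge_base: AskFn,
    judge: JudgeFn,
) -> float:
    """Average the 1-10 scores the judge gives over all (query, expected) pairs."""
    if not test_set:
        raise ValueError("the test set is empty")
    scores = []
    for query, expected in test_set:
        actual = ask_knowledge_base(query)      # send the query to the knowledge base
        score = judge(query, expected, actual)  # the LLM rates the actual response
        if not 1 <= score <= 10:
            raise ValueError(f"score {score} is outside the 1-10 scale")
        scores.append(score)
    return sum(scores) / len(scores)            # final score = average of all scores


# Toy usage: a dictionary plays the knowledge base, and the toy judge
# gives 10 for an exact match and 4 otherwise.
answers = {"query 1": "expected 1"}


def toy_judge(query: str, expected: str, actual: str) -> int:
    return 10 if actual == expected else 4


final = evaluate_test_set(
    [("query 1", "expected 1"), ("query 2", "expected 2")],
    ask_knowledge_base=lambda q: answers.get(q, "I don't know."),
    judge=toy_judge,
)
print(final)  # 7.0
```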

The more questions included in the test set, the more reliable the resulting score will be.
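One common way to quantify this, not necessarily how the product reports it, is the standard error of the average, s/√n, where s is the sample standard deviation of the per-query scores and n is the number of queries. The sketch below uses only the Python standard library; `score_with_standard_error` is a hypothetical helper. For the same mix of scores, a larger test set gives a smaller standard error, that is, a more stable average.

```python
import math
import statistics
from typing import Sequence, Tuple


def score_with_standard_error(scores: Sequence[float]) -> Tuple[float, float]:
    """Return (average score, standard error of that average)."""
    if len(scores) < 2:
        raise ValueError("need at least two scores to estimate the spread")
    average = statistics.mean(scores)
    standard_error = statistics.stdev(scores) / math.sqrt(len(scores))
    return average, standard_error


small = [6, 8, 10]  # three queries
large = small * 4   # twelve queries with the same mix of scores
print(score_with_standard_error(small))  # average 8, standard error about 1.155
print(score_with_standard_error(large))  # average 8, standard error about 0.492
```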

You can evaluate response quality with multiple test sets. Each test set is evaluated independently.

Prepare test set

You can create a test set yourself or generate it using an LLM.

Create manually

1. Navigate to the Quality evaluation section.

2. Download the test set template: under Test sets, click Upload, and then click Download XLSX template in the upload window.

3. Add your queries and expected responses to the file.

4. To evaluate response quality for individual knowledge base segments, add a Segments column to the test set:

   - If answering a query requires searching specific segments, list them separated by commas.
   - To search sources that have no segments, specify `include_without_segments`.
   - To search the entire knowledge base, leave the field blank.

   Example: `Quality control,include_without_segments`

5. In the upload window, enter a display name for the test set and attach the completed file.

6. Click Create.

Under Test sets, you can download the test set.
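The rules for the Segments column can be summarized as a small parser, which may help when checking a test set before uploading it. This is a sketch, not the product's own logic: `SearchScope` and `parse_segments_cell` are hypothetical names, and trimming spaces around segment names is an assumption.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

INCLUDE_WITHOUT_SEGMENTS = "include_without_segments"


@dataclass(frozen=True)
class SearchScope:
    """Hypothetical description of where a query is searched."""
    whole_knowledge_base: bool
    segments: FrozenSet[str]
    include_unsegmented: bool


def parse_segments_cell(cell: Optional[str]) -> SearchScope:
    # A blank cell means: search the entire knowledge base.
    if cell is None or not cell.strip():
        return SearchScope(True, frozenset(), False)
    # Comma-separated list; trimming spaces is an assumption.
    names = [part.strip() for part in cell.split(",") if part.strip()]
    include_unsegmented = INCLUDE_WITHOUT_SEGMENTS in names
    segments = frozenset(n for n in names if n != INCLUDE_WITHOUT_SEGMENTS)
    return SearchScope(False, segments, include_unsegmented)


scope = parse_segments_cell("Quality control,include_without_segments")
# scope.segments == {"Quality control"}, scope.include_unsegmented is True
```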

Generate by LLM

1. Navigate to the Quality evaluation section.

2. Under Test sets, click Generate.

3. Specify the test set parameters:

   - Name to display in the list of test sets.
   - Knowledge base segments, if you want to evaluate response quality for individual segments.
   - Language model for generating queries and responses.
   - Number of queries per source document and the maximum number of queries in the test set.
   - Prompt for generation. You can define a specific style or types of questions (for example, comparative or step-by-step), add examples, or change the language.

     Caution:

     - To view and edit the prompt, you need the KHUB_ADMIN role.
     - The prompt defines the response structure that the system expects from the model. An accidental change to this structure (such as deleting a field) prevents the system from processing the response, and test set generation fails. Make changes with caution.

4. Click Create.

Generating a test set with an LLM can take up to several hours, depending on the number of queries.
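Because generation time depends on the number of queries, it can help to estimate the test set size in advance. The sketch below assumes, without confirmation from this page, that each source document yields at most the per-document number of queries and that the total is capped at the test set maximum; `estimate_test_set_size` is a hypothetical helper.

```python
def estimate_test_set_size(
    source_documents: int, queries_per_document: int, max_queries: int
) -> int:
    """Upper bound on the number of generated queries (assumed capping rule)."""
    if min(source_documents, queries_per_document, max_queries) < 0:
        raise ValueError("all counts must be non-negative")
    # Assumption: each document yields up to queries_per_document queries,
    # and the total is capped at max_queries.
    return min(source_documents * queries_per_document, max_queries)


print(estimate_test_set_size(40, 3, 100))  # 100: 40 * 3 = 120, capped at 100
print(estimate_test_set_size(20, 3, 100))  # 60: below the cap
```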

Under Test sets, you can:

- Track the generation status.
- Cancel the generation if necessary.
- Download the generated test set.

Start evaluation

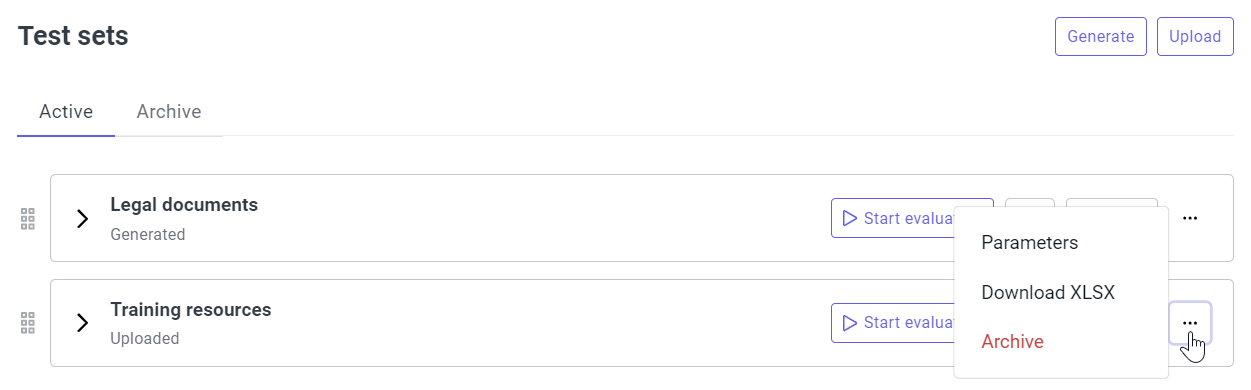

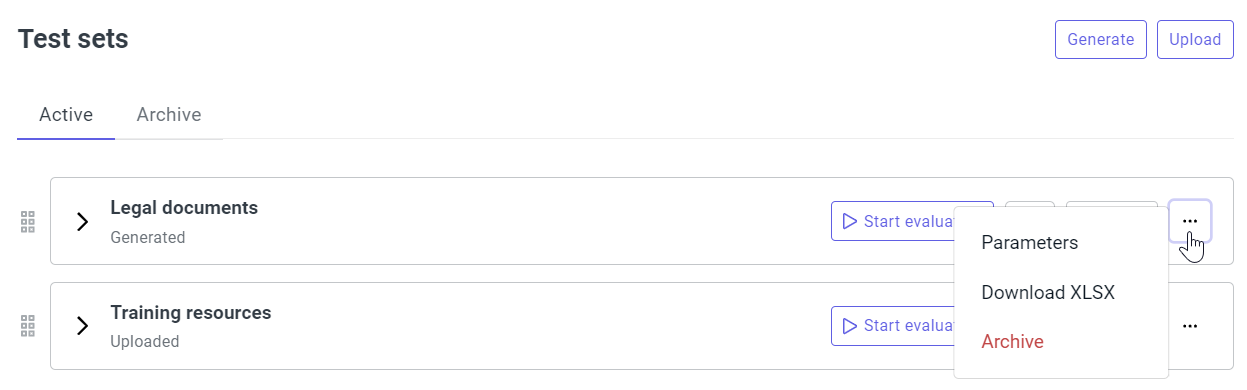

To modify settings before starting an evaluation:

1. Click the icon next to the test set.

2. Select a model from the list.

3. Edit the evaluation prompt if needed: you can adjust the scoring scale, clarify the criteria for lowering a score, or add examples.

   Caution:

   - To view and edit the prompt, you need the KHUB_ADMIN role.
   - The prompt defines the response structure that the system expects from the model. An accidental change to this structure (such as deleting a field) prevents the system from processing the response, and the evaluation fails. Make changes with caution.

4. Click Save and start.

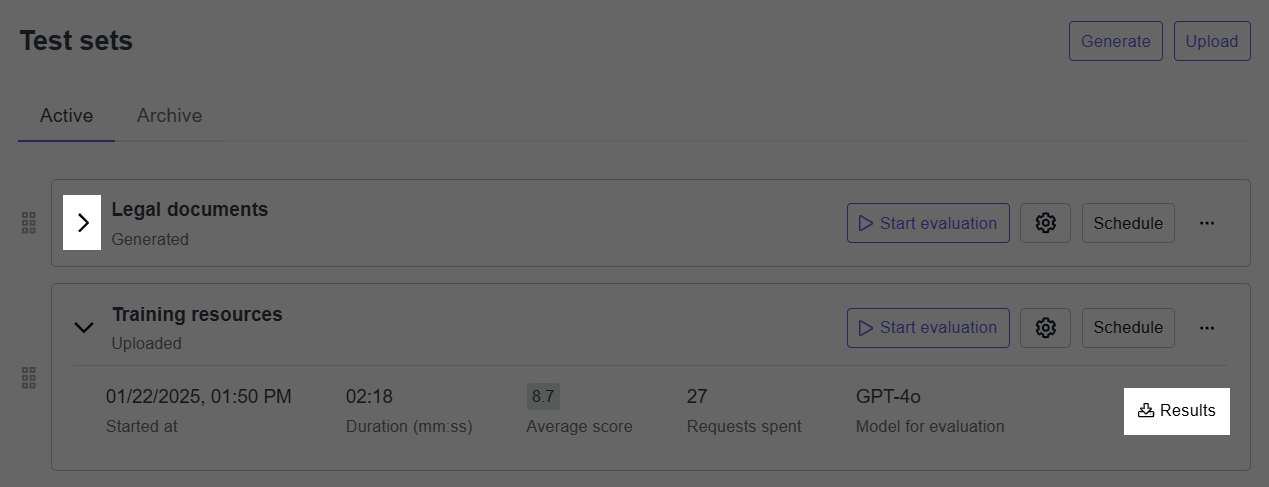

To start the evaluation with the current settings, click Start evaluation next to the test set.

Evaluation can take up to several hours, depending on the number of queries in the test set.
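The caution about the prompt's response structure can be illustrated with a validator. The sketch assumes, hypothetically, that the evaluation prompt asks the model for a JSON object with an integer `score` field; the real structure is defined by the prompt in your project and may differ. If that field is removed from the prompt, replies stop containing it and a check like this fails for every query. If you change the scoring scale in the prompt, the accepted range has to change with it. Both function names are hypothetical.

```python
import json
from typing import Optional


def judge_reply_problem(raw: str, scale_min: int = 1, scale_max: int = 10) -> Optional[str]:
    """Describe the first problem in the reply, or return None if it is usable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return f"not valid JSON: {exc.msg}"
    if not isinstance(data, dict):
        return "reply is not a JSON object"
    if "score" not in data:  # hypothetical required field
        return "missing required field 'score'"
    score = data["score"]
    if isinstance(score, bool) or not isinstance(score, int):
        return f"'score' must be an integer, got {score!r}"
    if not scale_min <= score <= scale_max:
        return f"'score' {score} is outside {scale_min}..{scale_max}"
    return None


def parse_judge_reply(raw: str, scale_min: int = 1, scale_max: int = 10) -> int:
    """Extract the score, raising ValueError when the reply cannot be processed."""
    problem = judge_reply_problem(raw, scale_min, scale_max)
    if problem is not None:
        raise ValueError(problem)
    return json.loads(raw)["score"]


print(parse_judge_reply('{"score": 8, "comment": "Covers the main steps."}'))  # 8
print(judge_reply_problem('{"comment": "Covers the main steps."}'))  # missing required field 'score'
print(judge_reply_problem('{"score": 4}', scale_max=5))  # None: valid on a 1-5 scale
```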

View evaluation results

To download a detailed report with the score for each query in the test set, click the icon next to the test set, and then click Results for the required evaluation.
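When analyzing a report, a useful first step is to list the queries with the lowest scores. The sketch assumes the report rows have already been loaded into dictionaries (for example, with a spreadsheet library); the `query` and `score` keys, the `weakest_queries` name, and the default threshold of 5 are hypothetical and should be adapted to the actual report columns.

```python
from typing import Any, Dict, List, Sequence

Row = Dict[str, Any]  # one report row; the "query" and "score" keys are assumptions


def weakest_queries(rows: Sequence[Row], threshold: int = 5) -> List[Row]:
    """Rows scored below threshold, lowest score first (ties ordered by query text)."""
    weak = [row for row in rows if int(row["score"]) < threshold]
    return sorted(weak, key=lambda row: (int(row["score"]), str(row["query"])))


report = [
    {"query": "query A", "score": 9},
    {"query": "query B", "score": 3},
    {"query": "query C", "score": 4},
    {"query": "query D", "score": 3},
]
for row in weakest_queries(report):
    print(row["score"], row["query"])
# 3 query B
# 3 query D
# 4 query C
```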

Set up schedule

To schedule an evaluation:

1. Click Schedule next to the test set.

2. Specify the frequency and start time.

3. To skip a scheduled evaluation when nothing has changed since the last evaluation, use the options under Evaluate only on updates:

   - On data source updates: start the evaluation only if the knowledge base data has been updated.
   - On project settings updates: start the evaluation only if the project settings have been updated.

   Enable both options to start the evaluation whenever there are any updates.

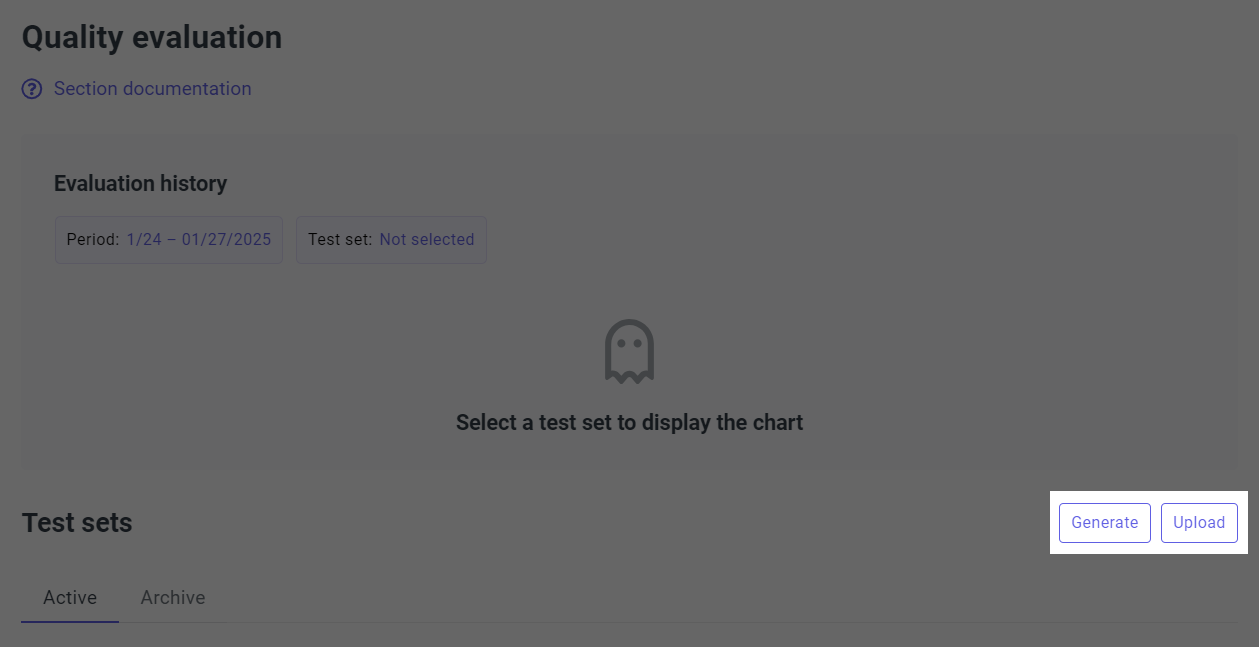
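The Evaluate only on updates options can be read as the following decision rule. It is a sketch of the behavior described above, not the scheduler's code: the function and parameter names are hypothetical, and the assumption that a scheduled evaluation always runs when neither option is enabled is inferred rather than stated on this page.

```python
def should_run_scheduled_evaluation(
    on_data_source_updates: bool,
    on_project_settings_updates: bool,
    data_sources_changed: bool,
    project_settings_changed: bool,
) -> bool:
    """Decide whether a scheduled evaluation starts (hypothetical rule)."""
    if not (on_data_source_updates or on_project_settings_updates):
        # Assumption: with no option enabled, the evaluation runs on every scheduled start.
        return True
    return (on_data_source_updates and data_sources_changed) or (
        on_project_settings_updates and project_settings_changed
    )


# Only "On data source updates" is enabled, but only the settings changed: skipped.
print(should_run_scheduled_evaluation(True, False, False, True))  # False
# Both options are enabled and the settings changed: runs.
print(should_run_scheduled_evaluation(True, True, False, True))   # True
```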

View evaluation history

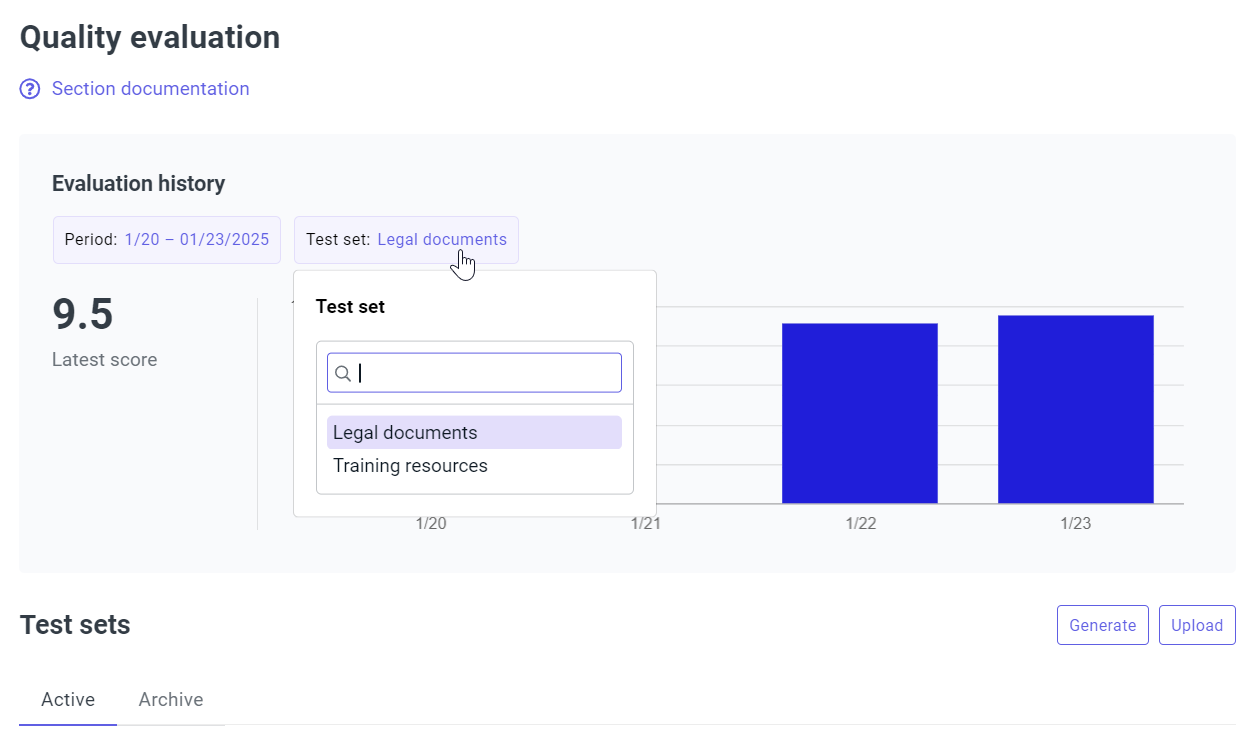

The evaluation history is available separately for each test set.

To view the chart, select the period and test set under Evaluation history.

To view the list of evaluations, click the icon next to the test set.
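The chart shows how the overall score changes over time. To see which queries caused a drop, you can compare the per-query scores from two downloaded reports. The sketch below is hypothetical: `find_regressions` is an illustrative name, and it assumes both reports come from the same test set and that the query text identifies a query across runs.

```python
from typing import Dict, List, Tuple


def find_regressions(
    before: Dict[str, int], after: Dict[str, int], min_drop: int = 1
) -> List[Tuple[str, int, int]]:
    """(query, old score, new score) for queries whose score fell by at least min_drop.

    Only queries present in both runs are compared; the largest drops come first.
    """
    drops = [
        (query, before[query], after[query])
        for query in before.keys() & after.keys()
        if before[query] - after[query] >= min_drop
    ]
    return sorted(drops, key=lambda item: (item[2] - item[1], item[0]))


previous_run = {"query A": 9, "query B": 7, "query C": 5}
latest_run = {"query A": 6, "query B": 7, "query C": 4, "query D": 8}
print(find_regressions(previous_run, latest_run))
# [('query A', 9, 6), ('query C', 5, 4)]
```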